This post is part 1/5 of my Data-Driven Code Generation of Unit Tests series.

At Morningstar, I created a multi-language, cross-platform performance analytics library that provides both online (incremental) and offline (batch) implementations of a number of common financial analytics, such as Alpha, Beta, R-Squared, Sharpe Ratio, Sortino Ratio, and Treynor Ratio (more on this library later). The library relies almost exclusively on a comprehensive suite of automated unit tests to validate its correctness. I quickly found that maintaining a nearly identical battery of unit tests in three different programming languages was a chore, and I had a hunch that I could use a common technique to deal with this problem: code generation.

The basic ideas behind the approach are quite straightforward. The first idea is language independence: a calculation applied to a known set of inputs must produce the same output (allowing for rounding error), regardless of programming language. Therefore, a unit test for the implementation of Alpha in C# should be nearly identical in function (and remarkably similar in form) to a unit test for the implementation of Alpha in Java. Perhaps this means that we don’t need to write the unit test twice; we can have the computer perform the translation for us.

The second idea is one of calculation similarity. Financial performance analytics tend to follow a common pattern: they all take in one to three streams of returns (security, benchmark, risk-free rate); they are almost all aggregate functions; most (but not all) can be implemented in both online and offline forms; and many support annualization. The code for the unit test for Beta looks remarkably like the code for the unit test for Alpha; the only significant difference is the expected result. Therefore, if we can encode only the differences among the calculations (e.g. their expected results) in some sort of data file, perhaps we can use code generation for the vast majority of the unit tests for the calculation library.
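To make the "encode only the differences" idea concrete, here is a minimal sketch in Python. It is not the library's actual data format or code: the column names, the semicolon-separated return streams, and the simplified textbook definitions of Alpha and Beta (regression intercept and slope, with no risk-free rate) are all assumptions made for illustration.

```python
import csv
import io
import math

# Hypothetical test-case table. Each row differs only in the calculation
# name and the expected result; the inputs and test logic are shared.
CASES_CSV = """\
calculation,security,benchmark,expected
beta,0.03;0.05;0.07,0.01;0.02;0.03,2.0
alpha,0.03;0.05;0.07,0.01;0.02;0.03,0.01
"""


def mean(xs):
    return sum(xs) / len(xs)


def beta(security, benchmark):
    # Simplified definition: covariance(security, benchmark) / variance(benchmark).
    ms, mb = mean(security), mean(benchmark)
    cov = sum((s - ms) * (b - mb) for s, b in zip(security, benchmark))
    var = sum((b - mb) ** 2 for b in benchmark)
    return cov / var


def alpha(security, benchmark):
    # Simplified definition: regression intercept, ignoring the risk-free rate.
    return mean(security) - beta(security, benchmark) * mean(benchmark)


CALCULATIONS = {"alpha": alpha, "beta": beta}


def parse_returns(cell):
    # Turn "0.01;0.02" into [0.01, 0.02].
    return [float(x) for x in cell.split(";")]


def run_case(row, tolerance=1e-9):
    # One generic test body serves every calculation in the table.
    func = CALCULATIONS[row["calculation"]]
    actual = func(parse_returns(row["security"]), parse_returns(row["benchmark"]))
    return math.isclose(actual, float(row["expected"]),
                        rel_tol=tolerance, abs_tol=tolerance)


def run_all(text=CASES_CSV):
    # Map each calculation name to whether its test case passed.
    rows = csv.DictReader(io.StringIO(text))
    return {row["calculation"]: run_case(row) for row in rows}
```

Calling `run_all()` checks every row with the same test body. Adding a new calculation means adding a function and a row, not writing a new test by hand.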

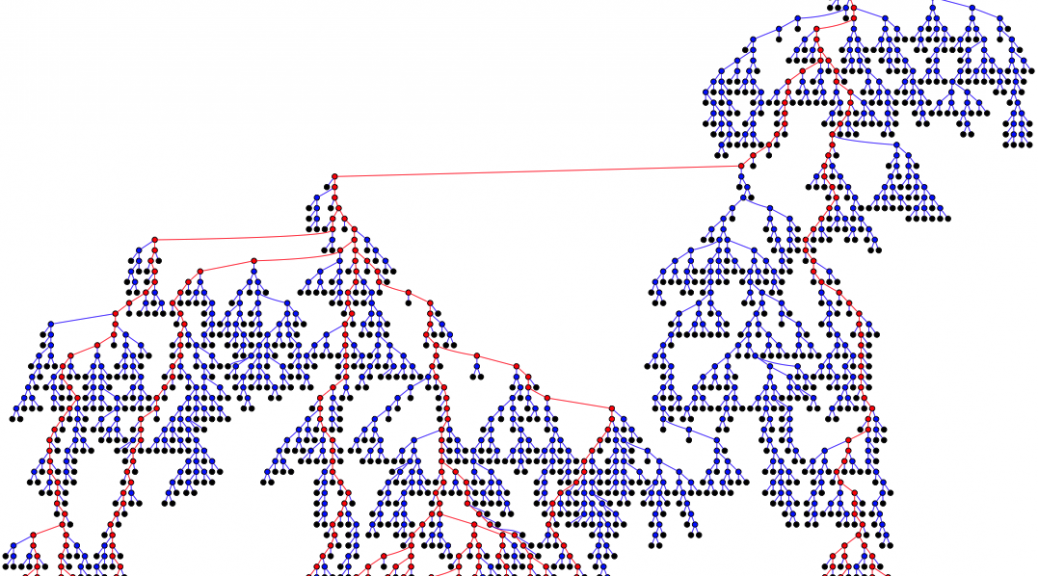

My hunch paid off. In the end, I had a single CSV file which contained all the important differences among the calculations (e.g. their expected values). The build process uses this CSV file to generate the entire unit test suite in C++ (using CMake, Jinja2, and the Boost Unit Test Framework), Java (using Apache Maven, StringTemplate, and JUnit), and C# (using MSBuild, T4 Text Templates, and the Microsoft Unit Test Framework for Managed Code). This guaranteed that every calculation produces the same result in every language, given the same input.

Along the way, I found language-specific bugs (typically typos) in the performance analytics library. I found language-specific bugs in previously existing libraries at Morningstar (fortunately these were niche languages that weren’t actively used in products). I learned a lot about differences in templating systems for code generation (Jinja2 and T4 were pleasant; StringTemplate was much less so) and about using code generation in build systems (Maven is a real pain; SBT is probably a lot nicer). Furthermore, I was able to use the same metadata file and code generation tools to power binding and wrapper libraries around the core performance analytics library (more on this later).
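As a rough illustration of how one CSV file can drive test generation for more than one language, the sketch below renders JUnit-style and MSTest-style test methods from the same rows. It uses Python's standard-library `string.Template` as a stand-in for the real templating systems (Jinja2, StringTemplate, T4). The CSV columns, the `Analytics` class, and the `SECURITY`, `BENCHMARK`, and `TOLERANCE` names are hypothetical and are not taken from the actual library.

```python
import csv
import io
from string import Template

# Hypothetical metadata file: one row per calculation.
CASES_CSV = """\
calculation,expected
Alpha,0.01
Beta,2.0
"""

# Java (JUnit-style) test method template.
JAVA_TEMPLATE = Template("""\
    @Test
    public void test${name}() {
        assertEquals(${expected}, Analytics.${method}(SECURITY, BENCHMARK), TOLERANCE);
    }
""")

# C# (MSTest-style) test method template.
CSHARP_TEMPLATE = Template("""\
    [TestMethod]
    public void Test${name}()
    {
        Assert.AreEqual(${expected}, Analytics.${name}(Security, Benchmark), Tolerance);
    }
""")


def render_tests(csv_text, template):
    # Render one test method per CSV row using the given language template.
    rows = csv.DictReader(io.StringIO(csv_text))
    return "\n".join(
        template.substitute(
            name=row["calculation"],
            # Java convention: lower-case first letter for method names.
            method=row["calculation"][0].lower() + row["calculation"][1:],
            expected=row["expected"],
        )
        for row in rows
    )


if __name__ == "__main__":
    print(render_tests(CASES_CSV, JAVA_TEMPLATE))
    print(render_tests(CASES_CSV, CSHARP_TEMPLATE))
```

Because the expected values live only in the CSV, a change to a row propagates to every generated language the next time the templates are rendered.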

Future posts in this series will explain how I implemented data-driven code generation of unit tests in each of the above programming languages.

I’d love to hear from you if you found this useful, or if you’ve applied similar techniques elsewhere!